Dr. Aijun Zhang is a senior lead of interpretable machine learning in Corporate Model Risk at Wells Fargo. His banking career began with quantitative research in Enterprise Credit Risk at Bank of America (2008-2009) and Bank of America Merrill Lynch (2009-2013).

From 2014 to 2016, Dr. Zhang served as the director of the Big Data Center in Education at Hong Kong Baptist University. From 2016 to 2020, he was a tenure-track assistant professor in the Department of Statistics and Actuarial Science at the University of Hong Kong, where he founded HKU's elite BASc programme in Applied Artificial Intelligence.

Aijun holds BSc and MPhil degrees in Mathematics and Statistics from Hong Kong Baptist University, and a PhD degree in Statistics from the University of Michigan at Ann Arbor. He lives in Hong Kong with his wife and three lovely kids.

This page was last updated on Dec 15, 2021 (one year after Aijun resigned from his position at the University of Hong Kong). Further updates about Dr. Zhang can be found through the following channels:

- LinkedIn: https://www.linkedin.com/in/ajzhang

- Email: ajzhang@umich.edu

- GitHub: https://github.com/SelfExplainML

What's New

- 12/2021: PolyTraverse paper accepted by AAAI-22 AdvML Workshop

- 10/2021: SIMTree paper accepted by TKDE

- 10/2021: DesignIML paper accepted by ACM XAIF21 Workshop

- 07/2021: Stable Learning paper accepted by TKDD

- 07/2021: GAMI-Net paper accepted by Pattern Recognition

- 06/2021: SeqUD paper accepted by JMLR

- 05/2021: Invited talk at CFPB (joint with Agus Sudjianto)

- 04/2021: Invited talk at GTC 2021 (joint with Zebin Yang)

- 03/2021: Invited talk at CeFPro

- 02/2021: SteinGLM paper accepted by Neural Networks

- 01/2021: Launched GitHub: SelfExplainML

Research

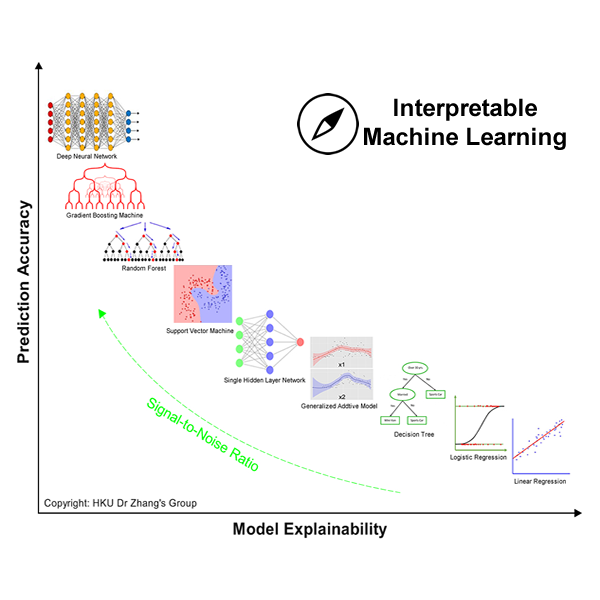

Dr. Zhang’s research interests include experimental design, machine learning, and explainable artificial intelligence, as well as their real-world applications in banking and finance, industrial engineering, and other areas.

Some of his recent projects include automated and interpretable machine learning, big data subsampling, and L0-constrained optimization for high-dimensional and nonparametric modeling.

Papers

Under Review:

- Sudjianto, A., Knauth, W., Singh, R., Yang, Z. and Zhang*, A. (2020). Unwrapping the black box of deep ReLU networks: interpretability, diagnostics, and simplification. arXiv: 2011.04041

- Zhang, H., Yang, Z., Sudjianto, A. and Zhang, A. (2021). A sequential Stein’s method for faster training of additive index models. Submitted.

- Wen, C., Wang, X. and Zhang*, A. (2020). L0 trend filtering. Submitted.

- Zhang, M., Zhou, Y., Zhou, Z. and Zhang*, A. (2020). Model-free subsampling method based on uniform designs. Submitted.

- Zhang, H., Zhang, A. and Li, R. (2020). Least squares approximation via deterministic leveraging. Submitted.

- Guo, Y., Su, Y., Yang, Z. and Zhang*, A. (2020). Explainable recommendation systems by generalized additive models with manifest and latent interactions. Submitted.

Published/Accepted:

- Xu, K., Vaughan, J., Chen, J., Zhang, A. and Sudjianto, A. (2021). Traversing the local polytopes of ReLU neural networks. AAAI-2022 Workshop on Adversarial Machine Learning and Beyond. arXiv: 2111.08922

- Sudjianto, A. and Zhang, A. (2021). Designing inherently interpretable machine learning models. ACM ICAIF 2021 Workshop on Explainable AI in Finance. arXiv: 2111.01743

- Sudjianto, A., Yang, Z. and Zhang, A. (2021). Single-index model tree. IEEE Trans. on Knowledge and Data Engineering. DOI: 10.1109/TKDE.2021.3126615

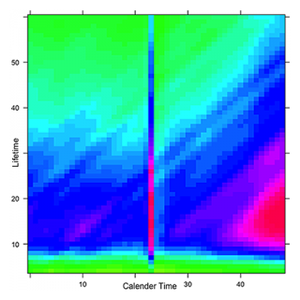

- Kuang, K., Zhang, H., Wu, F., Zhuang, Y. and Zhang*, A. (2021). Balance-subsampled stable prediction across unknown test data. ACM Trans. on Knowledge Discovery from Data, 16(3), June 2022. DOI: 10.1145/3477052

- Yang, Z., Zhang*, A. and Sudjianto, A. (2021). GAMI-Net: an explainable neural network based on generalized additive models with structured interactions. Pattern Recognition, 120, December 2021, 108192. DOI: 10.1016/j.patcog.2021.108192

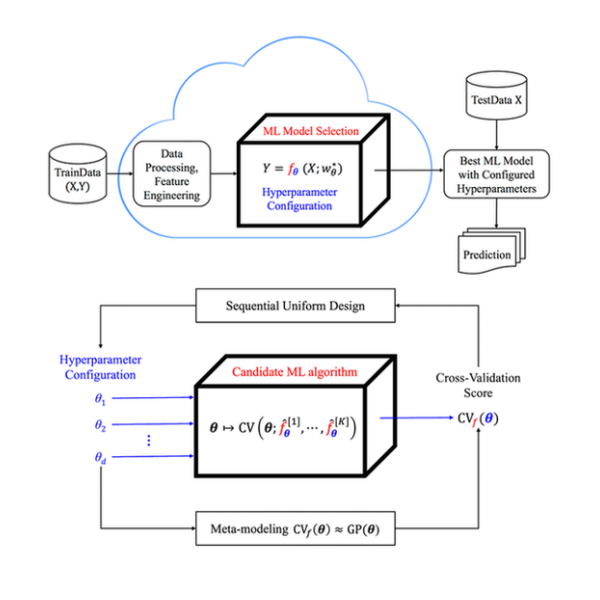

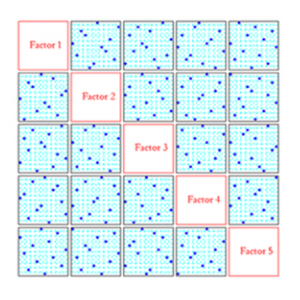

- Yang, Z. and Zhang*, A. (2021). Hyperparameter optimization via sequential uniform designs. Journal of Machine Learning Research, 22(149):1-47. Paper, Code

- Yang, Z., Zhang, H., Sudjianto, A. and Zhang, A. (2021). An effective SteinGLM initialization scheme for training multi-layer feedforward sigmoidal neural networks. Neural Networks, 139, 149-157. DOI: 10.1016/j.neunet.2021.02.014

- Yang, Z., Zhang*, A. and Sudjianto, A. (2021). Enhancing explainability of neural networks through architecture constraints. IEEE Trans. on Neural Networks and Learning Systems, 32(6), 2610-2621. DOI: 10.1109/TNNLS.2020.3007259

- Zhang*, A., Zhang, H. and Yin, G. (2020). Adaptive iterative Hessian sketch via A-optimal subsampling. Statistics and Computing, 30, 1075-1090. DOI: 10.1007/s11222-020-09936-8

- Wen, C., Zhang, A., Quan, S. and Wang, X. (2020). BeSS: An R package for best subset selection in linear, logistic and Cox proportional hazards models. Journal of Statistical Software, 94(4), June 2020. DOI: 10.18637/jss.v094.i04

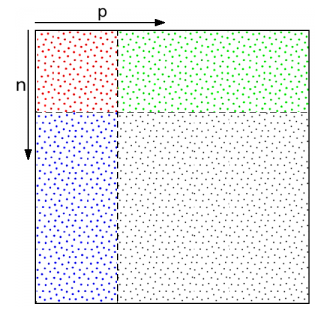

- Zhang, M., Zhang*, A. and Zhou, Y. (2020). Construction of uniform designs on arbitrary domains by inverse Rosenblatt transformation. In: Fan, J., Li, G., Li, R., Liu, M.-Q. and Pan, J. (eds.) Recent Advances in Statistics and Data Science – Festschrift in Honour of Professor Kai-Tai Fang. Springer. DOI: 10.1007/978-3-030-46161-4_7

- Zhang*, A. and Yang, Z. (2020). Hyperparameter tuning methods in automated machine learning (in Chinese). Scientia Sinica Mathematica, 50(5), 695-710. DOI: 10.1360/N012019-00092

- Yang, Z., Lin, D.K.J. and Zhang*, A. (2019). Interval-valued data prediction via regularized artificial neural network. Neurocomputing, 331, 336–345. DOI: 10.1016/j.neucom.2018.11.063

- Yang, F., Zhou, Y.-D. and Zhang, A. (2019). Mixed-level column augmented uniform designs. Journal of Complexity, 53, 23–39. DOI: 10.1016/j.jco.2018.10.006

- Zhu, J., Lv, K., Zhang, A., Pan, W. and Wang, X. (2019). Two-sample test for compositional data with ball divergence. Statistics and Its Interface, 12, 275-282. DOI: 10.4310/SII.2019.v12.n2.a8

- Tao, L., Ip, H.S., Zhang, A. and Shu, X. (2016). Exploring canonical correlation analysis with subspace and structured sparsity for web image annotation. Image and Vision Computing, 54, 22-30. DOI: 10.1016/j.imavis.2016.06.008

- Dillard, A.J., Ubel, P. A., Smith, D. M., Zikmund-Fisher, B. J., Nair, V., Derry, H. A., Zhang, A., Pitsch, R. K., Alford, S. H., McClure, J. B., Fagerlin, A. (2011). The distinct role of comparative risk perceptions in a breast cancer prevention program. Annals of Behavioral Medicine, 42(2), 262-268. DOI: 10.1007/s12160-011-9287-8

- Nair, V., Strecher, V., Fagerlin, A., Ubel, P., Resnicow, K., Murphy, S., Little, R., Chakraborty, B. and Zhang, A. (2008). Screening experiments and the use of fractional factorial designs in behavioral intervention research. American Journal of Public Health, 98, 1354-1359. DOI: 10.2105/AJPH.2007.127563

- Sudjianto, A., Nair, S., Yuan, M., Zhang, A., Kern, D. and Cela-Diaz, F. (2010). Statistical methods for fighting financial crimes. Technometrics, 52, 5-19. DOI: 10.1198/TECH.2010.07032

- Zhang, A. (2009). Statistical Methods in Credit Risk Modeling. PhD Dissertation (Co-advisors: Vijay Nair and Agus Sudjianto), University of Michigan. URL

- Wu, Z.-L., Zhang, A., Li, C.-H. and Sudjianto, A. (2008). Trace solution paths for SVMs via parametric quadratic programming. In: Proceedings of KDD DMMT’2008. ACM Press. URL

- Sudjianto, A., Cela-Diaz, F., Zhang, A. and Yuan, M. (2007). Anomaly detection in high-dimensional financial databases. In: Proceedings of MLMTA’2007. CSREA Press. URL

- Zhang, A. (2007). One-factor-at-a-time screening designs for computer experiments. SAE Technical Paper, 2007-01-1660. DOI: 10.4271/2007-01-1660

- Fang, K.-T., Zhang, A. and Li, R. (2007). An effective algorithm on generation of factorial designs with generalized minimum aberration. Journal of Complexity, 23, 740-751. DOI: 10.1016/j.jco.2007.03.010

- Zhang, A. (2005). Schur-convex discrimination of designs using power and exponential kernels. In: Fan, J. and Li, G. (eds.) Contemporary Multivariate Analysis and Design of Experiments – In Celebration of Professor Kai-Tai Fang’s 65th Birthday, 293–311. World Scientific Publisher. DOI: 10.1142/9789812567765_0018

- Zhang, A., Fang, K.-T., Li, R. and Sudjianto, A. (2005). Majorization framework for balanced lattice designs. Annals of Statistics, 33, 2837-2853. DOI: 10.1214/009053605000000679

- Zhang, A. (2004). Majorization Methodology for Experimental Designs. MPhil Dissertation (Advisor: Kai-Tai Fang), Hong Kong Baptist University. URL

- Fang, K.-T. and Zhang, A. (2004). Minimum aberration majorization for non-isomorphic saturated designs. Journal of Statistical Planning and Inference, 126, 337-346. DOI: 10.1016/j.jspi.2003.07.015

- Zhang, A., Wong, R.N.S., Ha, A.W.Y., Hu, Y.H. and Fang, K.-T. (2003). Authentication of traditional Chinese medicines using RAPD and functional polymorphism analysis. In: Fang, K.-T., Liang, Y.Z. and Yu, R.Q. (eds.) Proceedings of the 1st Conference on Data Mining and Bioinformatics in Chemistry and Chinese Medicines, 81-98.

- Zhang, A., Wu, Z.-L., Li, C.-H. and Fang, K.-T. (2003). On Hadamard-type output coding in multiclass learning. In: Liu, et al. (eds.) Intelligent Data Engineering and Automated Learning, 397-404. Springer-Verlag. DOI: 10.1007/978-3-540-45080-1_51

Packages

- Python package Aletheia: A Novel Toolkit for Unwrapping ReLU DNNs. Available at https://github.com/SelfExplainML/Aletheia

- Python package GAMI-Net: Generalized Additive Model with Structured Interactions. Available at https://github.com/SelfExplainML/GamiNet

- Python package ExNN: Enhanced Explainable Neural Networks. Available at https://github.com/SelfExplainML/ExNN

- Python package SeqUD: Sequential Uniform Designs. Available at https://github.com/SelfExplainML/SeqUD/

- R package UniDOE: Uniform Design of Experiments. Available at https://CRAN.R-project.org/package=UniDOE

- R package AMIAS: Alternating Minimization Induced Active Set Algorithms. Available at https://CRAN.R-project.org/package=AMIAS

- R package BeSS: Best Subset Selection in Linear, Logistic and CoxPH Models. Available at https://CRAN.R-project.org/package=BeSS

Teaching

- Fall 2020, STAT3612 Statistical Machine Learning, HKU

- Spring 2020, STAT3622 Data Visualization, HKU

- 2017-2019, STAT3612 Data Mining, HKU

- 2016-2019, STAT3622 Data Visualization, HKU

- Spring 2016, STAT3980/MATH4875 Selected Topics in Statistics, HKBU

- Fall 2015, Statistics in Banking and Finance, SUSTech (as guest instructor)

- Fall 2014, GCNU1025 Numbers Save the Day, HKBU

- Fall 2012, SCIT1020 The Power of Statistics, BNU-HKBU UIC (as guest instructor)

- 2004-2006, STATS350 Introduction to Statistics and Data Analysis, UM (as GSI)